Learning about impact: An open letter from Hewlett’s Evidence-Informed Policy team

The Evidence-Informed Policy team recently shared this letter with our grantee partners. We know that other funders and implementers are also grappling with how to learn about impact, so we’re sharing this letter openly. If you have feedback on our approach or other ideas you’d like to share, please email us at HewlettEIP@hewlett.org.

Dear evidence-informed policy partners,

We miss you! With continued lockdowns and travel restrictions, we are missing the more casual, extended conversations we have when we see you in person. There is so much we would like to talk about! At the top of our minds is hearing more about your impact, told in the messy, complicated ways that are true to the policy process but harder to convey in reports or Zoom calls.

With this letter, we invite you to share what you are learning (and questioning) about the impact of your important work. The next time we talk, or you write a report or blog, we invite you to tell us not only what you’re most proud of, but also why it is significant and, based on your knowledge of the context, what might happen next. We think that this approach could help us learn more together about the full impact of your work, even after COVID ends.

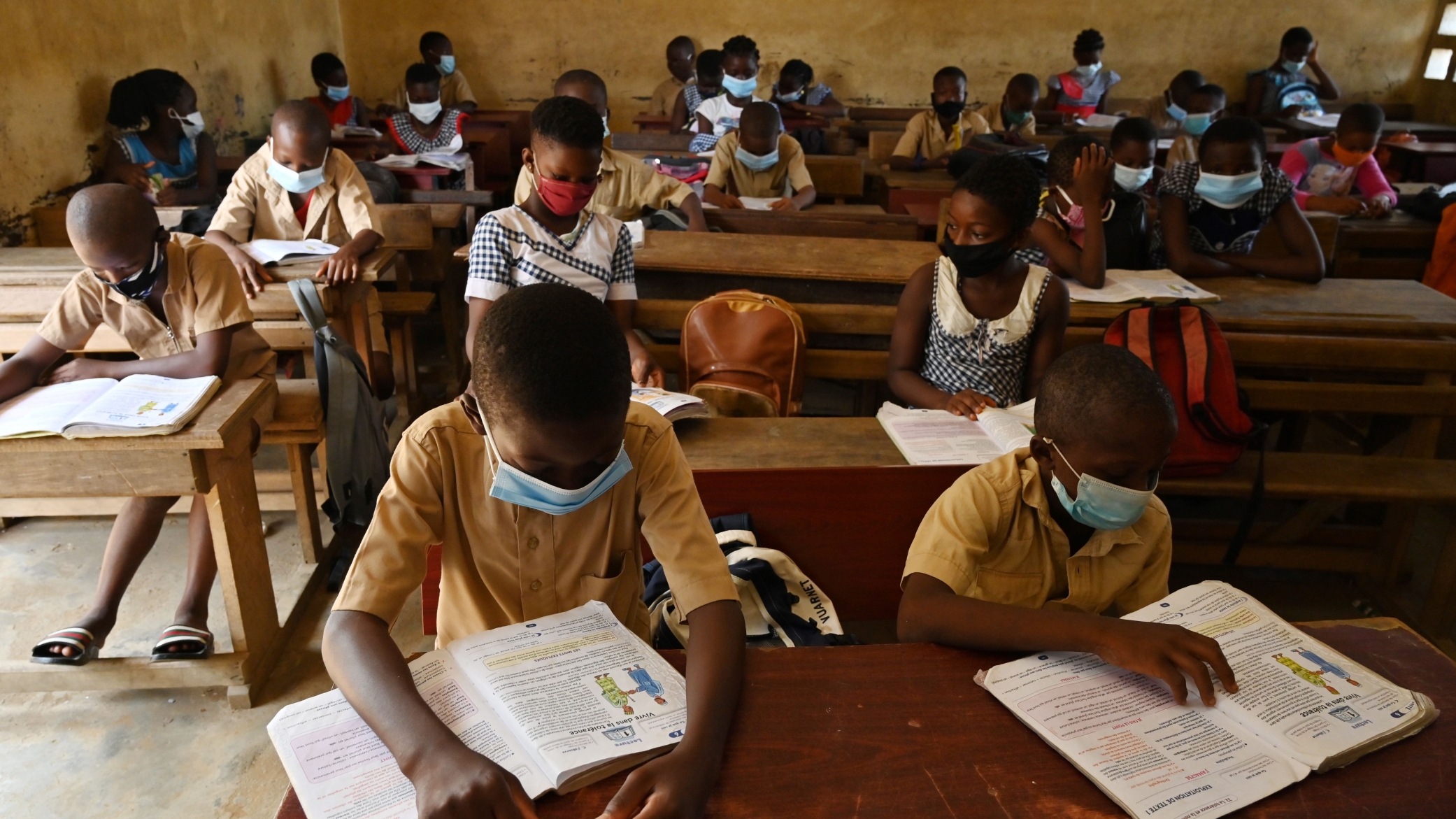

To start off, thank you for joining us in pursuing an ambitious goal: that governments systematically use evidence to improve their social and economic policies over time, enhancing the well-being of people living in East and West Africa.

We hold this ambitious goal as a guiding light, yet we know that, like us, many of you focus on more upstream outcomes, such as building governments’ capacity and motivation to use evidence or conducting policy research. We have partnered with you because we see you contributing to one or more of three outcomes in our EIP strategy that are key steps along the (admittedly circuitous) path toward this goal:

- policy changes that can ultimately lead to improvements in people’s lives,

- changes in government systems that promote ongoing evidence use, and

- a stronger evidence-informed policy field that advances evidence use.

You probably share one or more of these aspirations, although you might use different words to describe them.

We hope and expect that each of our three strategy outcomes ultimately contributes to meaningful and sustained improvements in government decisions and people’s lives, though in different ways and on quite different time horizons. Given that the road toward impact has many steps and isn’t linear, how can we tell we are on the right track? In part by answering two questions:

1) What successes have we already had toward achieving our outcomes and ultimate goal?

2) What did (or might) happen next?

On the first question—What are you most proud of?

We are eager to hear about achievements across the three outcomes above, especially those that help illustrate how this upstream work can plausibly contribute to our downstream ambitions of improving people’s well-being, which is beyond our or your direct control. We’d like to hear what you are especially proud of, even if the successes are not recent and even if they aren’t funded by Hewlett grants.

Sharing examples of your most successful work isn’t just for us. It can motivate the field and help us learn tactical lessons. It may also help us engage people who aren’t yet evidence champions by demonstrating what’s possible.

On to the second question—What might happen next?

Many of you tell us about impressive outputs and engagement efforts. We hear about your publications, your ongoing collaborations with government counterparts, and your access to senior government leaders. This kind of positioning is impressive and pays off, both for a particular line of work and in future efforts.

We’d like to hear more about what happened next (or could happen next), and to what extent your work is on track toward your (and our) three outcomes and ultimate goal of contributing to improvements in people’s well-being. This includes taking stock at the end of a grant reporting period on what’s happened so far; why this is (or might be) significant; and what you think could happen next on the path to downstream impact, given your knowledge of the context, actors, and institutions. We’re interested both in what might occur due to your own work and in what might happen as a result of external changes or the actions of others you don’t control or even influence. We’d like to hear about this for a handful of your highest-impact examples and/or those from which you’ve learned the most.

The table below illustrates what this might look like. These are illustrative examples, based on the kind of work our partners do. As you can see, we encourage you to be candid.

| Primary strategy outcome | What happened at the end of the reporting period? | Why is this significant or high potential? | What do you think will happen next? Will you be able to learn more? |
| --- | --- | --- | --- |
| Policy change | Senior member of the Ministry of Agriculture told us they plan to present our evidence about how to improve farmer extension programs to the minister. Our research suggests that if the ministry tweaks the program, participant farm yields could increase 20%. | Our contact is one of the minister’s close advisors. The changes to the program don’t cost extra and are easy to implement. | There’s a good chance that the minister will adopt our recommendations. But district offices implement national guidance inconsistently. If the minister adopts our recommendations, we expect that some but not all districts will implement the changes. In districts that do, we expect that yields will increase, likely by close to 20%. We will continue to be available to our contacts, and they will tell us what the ministers decide. We don’t expect deeper engagement given who we have access to and funding constraints. |
| Policy change | The Ministry of Education incorporated our advice on how to reopen schools safely into official guidance and has told districts they can reallocate staff to help implement it. | The Ministry is requiring all schools to incorporate this guidance and is actively discussing it with district-level officials. In the COVID context, we are seeing more demand for evidence about schooling and education approaches in general. So this case of using evidence to inform school re-opening is a hopeful signal of a changing trend toward more reliance on data and evidence within the Ministry. | We expect that some of our recommendations will be implemented, particularly in 15% of districts where we are able to provide advisory services. We are seeing growing demand for these services. We expect fewer cases of COVID in schools that adopt our recommendations, even if schools only implement the recommendations that don’t require shifting staff roles. Other recommendations require allocating staff differently, and it’s unclear if districts will choose to do this. We will attempt to assess this in districts where we provide advisory services. |
| Institutional change | Helped the Ministry of Health build age- and sex-disaggregated data into a database to track COVID vaccinations. | We hope this will help the government identify and prioritize the elderly, and identify whether there are gender disparities in vaccine rollout. Our government counterparts told us that they feel more equipped to track age- and sex-disaggregated data in future work. | We worked almost entirely with the research department. They seem committed to promoting data use, but we have little information about whether decision-makers and implementers will use these data. We are trying to increase the chances that they do by supporting the research department staff with their communications. We expect to get informal, qualitative information from the research department on how decision-makers and implementers respond. We are very optimistic that the research department is interested in tracking age- and sex-disaggregated data going forward, and has at least some greater capacity to do so. They have started to ask us for advice on how they can independently do this work in other projects. |
| Institutional change | Helped the Ministry of Finance develop and roll out training programs on using evidence in budgeting. | We conducted pre- and post-tests during the training, and participants’ scores increased 30%. Our organization received six requests for support in interpreting and applying evidence from the ministry in the two months following the training; usually, we get only one request a month. Additionally, in the course of working with the Ministry to design the training, we built stronger ties with longstanding staff. Based on what we learned from these strengthened connections, we changed our research agenda to more closely align with the ministry’s priorities. | We will ask training participants to take another assessment after three months. We expect a low response rate, and we will prioritize maintaining strong relationships over a higher response rate. Our best guess is that the training is most effective at sparking interest and helping us build relationships, and can open the door for ongoing relationships that help us build capacity and ultimately enable evidence use. We will continue to track qualitatively how the training program contributes to our relationships with the ministry, and any associated changes in the ministry’s work. |
| Field change | Developed a new approach to incorporating data about costs into program evaluations and promoted it at four major conferences. | Leaders of the field were involved in developing the standard and have told us they plan to promote it. Experts vigorously debated the standard. | We expect several leading scholars to incorporate the standard in the coming years; there’s a small chance they will help make it standard practice. We are reaching out to journal editors as well, and if any of them become champions, that increases the chances of this practice spreading. |
| Field change | Convened a community of practice of policy research organizations working with Ghana’s Ministry of Education, to coordinate efforts and support peer learning on government engagement. | Many data and research organizations work with this ministry, but we are not always aware of each other’s efforts and have rarely coordinated. As a result, multiple organizations sometimes work with the ministry on the same or a related problem, and ministry officials don’t have time to play a coordination role. | 70% of participants reported that the group led to at least one substantial change in their engagement with the ministry (e.g. coordinating efforts; presenting more compelling data visuals). Several participants are approaching funders about refining their plans to avoid duplicating others’ work. We don’t yet know if the funders will agree. |

Articulating “what happened next” as in the table above—in your reports, communications, and conversations with us and with each other—won’t definitively tell us whether governments systematically use evidence to improve social and economic policies over time, and in so doing, improve well-being for people living in East and West Africa. Still, we hope and expect it will give us a much better sense of whether and how we are all on track toward that goal. Thinking about what could plausibly “happen next” could also help clarify our collective thinking about the factors that help or hinder progress along that pathway even if these factors are largely out of our control. It will help us at Hewlett stay grounded in the realities of your work, and learn how we can better support our grantee partners, individually and collectively.

We recognize this approach isn’t easy, so we also invite you to share what barriers or questions you face in understanding the potential downstream impact, and whether you find this approach helpful.

Thank you again! We’re excited to learn more from and with you.